Large language model (LLM) applications, like ChatGPT, are plagued by persistent inaccuracies in their output, usually called hallucinations. Geoffrey Hinton, the “Godfather of AI,” thinks confabulation, the term used in psychology, is the correct one.

Bullshitting is a feature, not a bug. Half-truths and misremembered details are hallmarks of human conversation: “Confabulation is a signature of human memory. These models are doing just like people.”

In a paper (1) published this month, Michael Townsen Hicks, James Humphries & Joe Slater argue that ALL these falsehoods, and the overall activity of LLMs, are better understood as bullshit in the sense explored by Harry G. Frankfurt.

the models are in an important way indifferent to the truth of their outputs. We distinguish two ways in which the models can be said to be bullshitters, and argue that they clearly meet at least one of these definitions. We further argue that describing AI misrepresentations as bullshit is both a more useful and more accurate way of predicting and discussing the behaviour of these systems.

Is this a problem?

In a preprint (2) published this week and not peer reviewed, “Confabulation: The Surprising Value of Large Language Model Hallucinations,” Peiqi Sui, Eamon Duede, Sophie Wu & Richard Jean So go one step further (my emphasis):

This paper presents a systematic defense of large language model (LLM) hallucinations or ‘confabulations’ as a potential resource instead of a categorically negative pitfall. The standard view is that confabulations are inherently problematic and AI research should eliminate this flaw. In this paper, we argue and empirically demonstrate that measurable semantic characteristics of LLM confabulations mirror a human propensity to utilize increased narrativity as a cognitive resource for sense-making and communication. In other words, it has potential value. Specifically, we analyze popular hallucination benchmarks and reveal that hallucinated outputs display increased levels of narrativity and semantic coherence relative to veridical outputs. This finding reveals a tension in our usually dismissive understandings of confabulation. It suggests, counter-intuitively, that the tendency for LLMs to confabulate may be intimately associated with a positive capacity for coherent narrative-text generation.

Weren’t you tired of those boring Netflix series? Let’s embrace a weirder future and enjoy AI confabulations!

____________________

(1) Hicks, Michael Townsen, James Humphries, and Joe Slater. ‘ChatGPT Is Bullshit’. Ethics and Information Technology 26, no. 2 (8 June 2024): 38. https://doi.org/10.1007/s10676-024-09775-5.

(2) Sui, Peiqi, Eamon Duede, Sophie Wu, and Richard Jean So. ‘Confabulation: The Surprising Value of Large Language Model Hallucinations’. arXiv, 25 June 2024. https://doi.org/10.48550/arXiv.2406.04175.

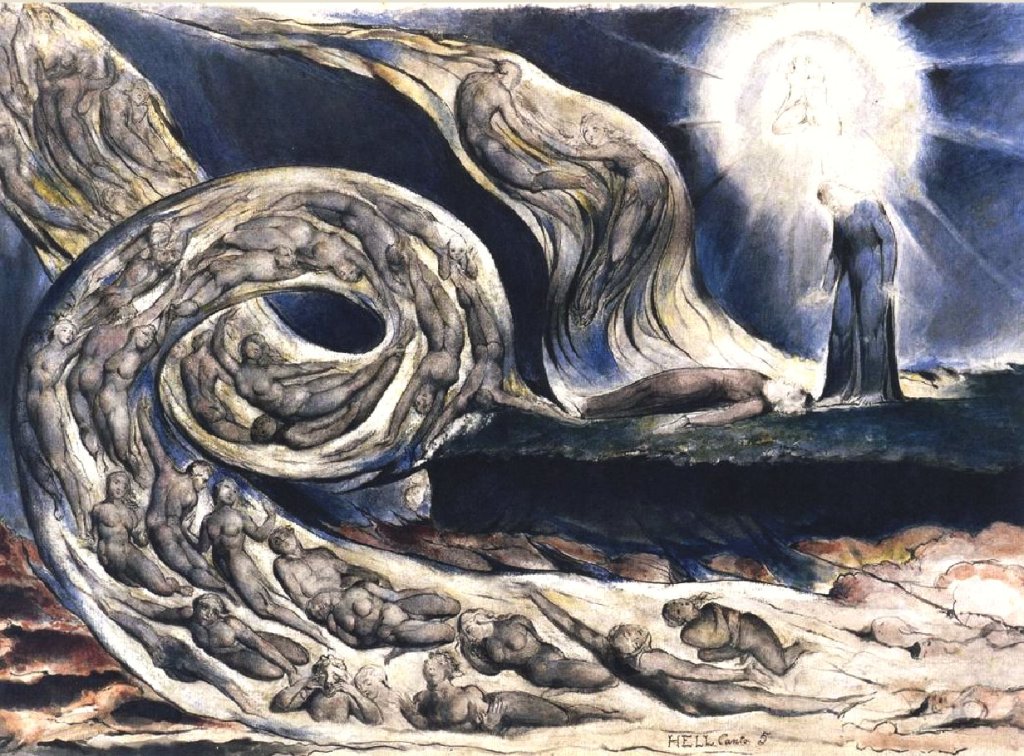

Featured Image: AI Confabulation? Nope. The Lovers’ Whirlwind (or The Circle of the Lustful), Francesca da Rimini and Paolo Malatesta, from Dante’s Inferno, Canto V. Illustration by William Blake.

The Confabulations preprint was, indeed, peer reviewed. It’s forthcoming at ACL.
